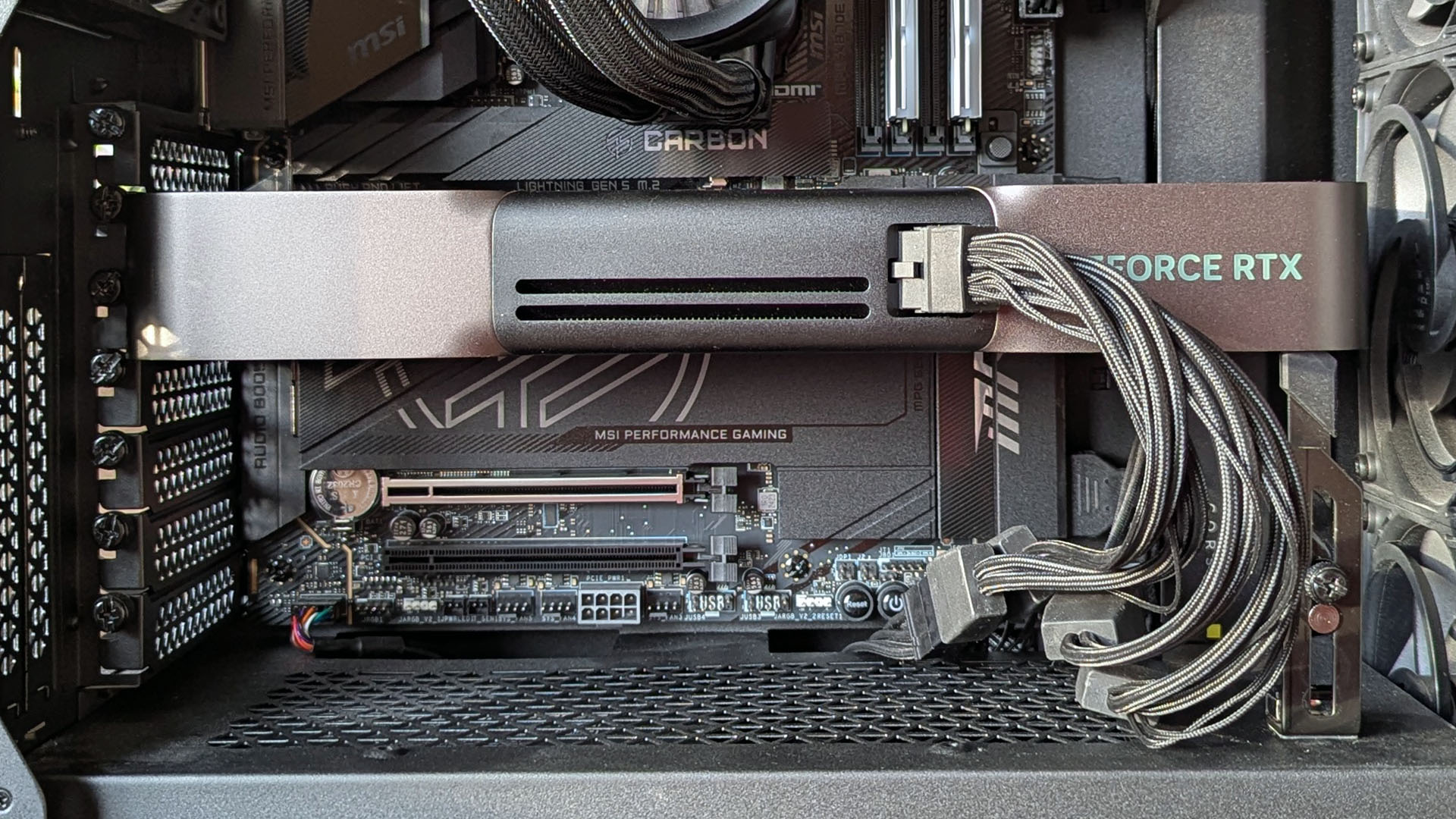

Ollama is one of the easiest ways to experiment with LLMs for local AI tasks on your own PC. But to get good performance out of it, you need a dedicated GPU.

The best GPU for the job isn’t necessarily the best one for gaming, though. For example, you may actually have a better time with local AI using an older RTX 3090 than, say, a newer RTX 5080.

For gaming purposes, the newer card will be the better option. But for AI workloads, the old workhorse has one clear edge. It has more memory: 24GB of VRAM against the RTX 5080’s 16GB.

VRAM is king if you want to run LLMs on your PC

While the compute power of the later generation GPUs may well be better, if you don’t have the VRAM to back it up, it’s wasted on local AI.

An RTX 5060 will be a better GPU for gaming than the old RTX 3060, but with 8GB VRAM on the newer one versus 12GB on the older one, it’s less useful for AI.

When you’re using Ollama, you want to be able to load the LLM entirely into that pool of deliciously fast VRAM to get the best performance. If it doesn’t fit, it’ll spill out into your system memory, and the CPU will start to take up some of the load. When that happens, performance starts to dive.

The same principle holds if you’re using LM Studio instead of Ollama, even with an integrated GPU. You want to have as much memory reserved for the GPU as possible to load up the LLM and keep the CPU from having to kick in.

GPU + GPU memory is the key recipe for maximum performance.
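If you want to check whether a loaded model has spilled over, `ollama ps` reports how each model is split between CPU and GPU. The sketch below reads that split from a value in the PROCESSOR column. The formats it accepts (`100% GPU`, `21%/79% CPU/GPU`) are an assumption about how that column is printed, and the function names are only illustrative.

```python
import re


def parse_processor_split(processor: str) -> tuple[int, int]:
    """Return (cpu_percent, gpu_percent) from a PROCESSOR value.

    Assumed formats: "100% GPU", "100% CPU" or "21%/79% CPU/GPU".
    """
    text = processor.strip()

    # Mixed case: the model is shared between system RAM/CPU and the GPU.
    mixed = re.fullmatch(r"(\d+)%/(\d+)%\s+CPU/GPU", text)
    if mixed:
        return int(mixed.group(1)), int(mixed.group(2))

    # Single-device case: everything is on the CPU or everything is on the GPU.
    single = re.fullmatch(r"(\d+)%\s+(CPU|GPU)", text)
    if single:
        percent = int(single.group(1))
        return (percent, 0) if single.group(2) == "CPU" else (0, percent)

    raise ValueError(f"Unrecognized processor value: {processor!r}")


def fully_on_gpu(processor: str) -> bool:
    """True only when the whole model is sitting in VRAM."""
    cpu_percent, gpu_percent = parse_processor_split(processor)
    return cpu_percent == 0 and gpu_percent == 100
```

Anything other than a full GPU load means part of the model is living in system memory, which is the situation you are trying to avoid.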

The easiest way to get an idea of how much VRAM you need is to look at how large the model is on disk. On Ollama, gpt-oss:20b, for example, is 13GB installed. You want to have enough memory available to load all of that as a bare minimum.

Ideally, you want some headroom on top of that, because larger tasks need bigger context windows, and those need memory of their own (the KV cache). Many recommend multiplying the size of the model by 1.2 to get a better ballpark figure for the VRAM you want to be shooting for.
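As a rough sketch of that rule of thumb, here is the arithmetic in Python. The function names are made up for illustration, and the 1.2 multiplier is only the ballpark mentioned above, not a guarantee: a very large context window can need more than this.

```python
def estimate_vram_gb(model_size_gb: float, headroom: float = 1.2) -> float:
    """Ballpark VRAM target: installed model size times a headroom factor."""
    return model_size_gb * headroom


def fits_in_vram(model_size_gb: float, vram_gb: float, headroom: float = 1.2) -> bool:
    """True if the ballpark target fits within the card's VRAM."""
    return estimate_vram_gb(model_size_gb, headroom) <= vram_gb


# gpt-oss:20b is 13GB installed, so the target is about 15.6GB.
print(round(estimate_vram_gb(13), 1))
print(fits_in_vram(13, vram_gb=16))  # a 16GB card
print(fits_in_vram(13, vram_gb=12))  # a 12GB card
```

By this estimate, gpt-oss:20b just squeezes onto a 16GB card and does not fit on a 12GB one.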

This same principle applies if you’re reserving memory for the iGPU to use in LM Studio, too.

Without enough VRAM, performance will tank

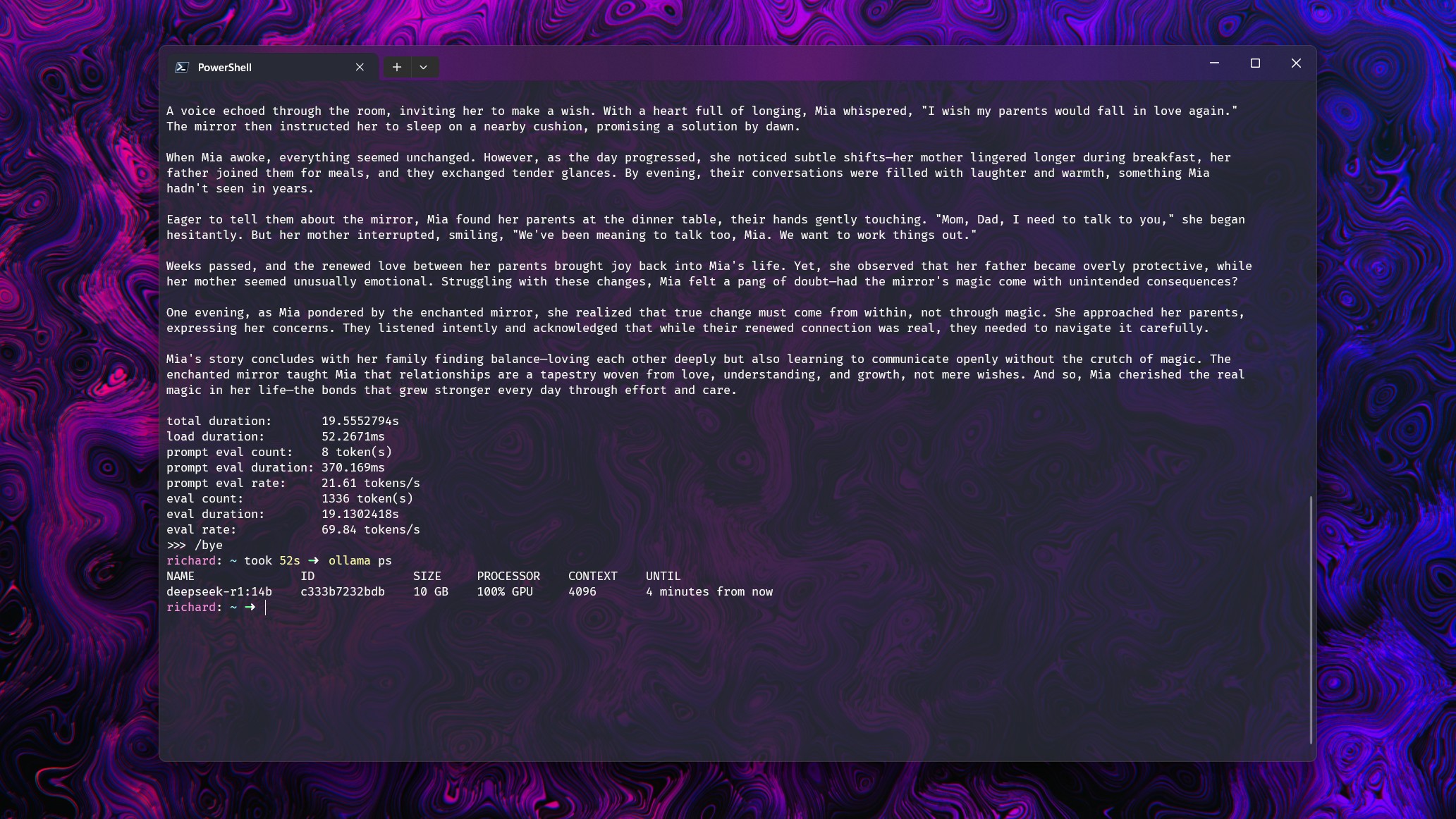

Time for some examples! This is an extremely simple test, but it illustrates the point I’m trying to make. I ran a simple “tell me a short story” prompt on a range of models using my RTX 5080. In each case, all of the models can be loaded entirely into the 16GB VRAM available.

The rest of the system contains an Intel Core i7-14700K and 32GB of DDR5-6600 RAM.

I then increased the context window on each model until Ollama was forced to spill over into the system RAM and CPU as well, to show just how far the performance drops off. So, to the numbers.
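If you want to try something similar yourself, one possible approach is to go through Ollama’s local HTTP API, which accepts a `num_ctx` option for the context window and returns `eval_count` and `eval_duration` (in nanoseconds) with each reply. This is a minimal sketch and not necessarily how the figures here were gathered. It assumes Ollama is running on its default port, and the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(model: str, prompt: str, num_ctx: int) -> dict:
    """Request body for a single, non-streamed generation with a set context window."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,
        "options": {"num_ctx": num_ctx},
    }


def tokens_per_second(response: dict) -> float:
    """Generation speed from eval_count (tokens) and eval_duration (nanoseconds)."""
    return response["eval_count"] / response["eval_duration"] * 1e9


def run_prompt(model: str, prompt: str, num_ctx: int) -> dict:
    """Send the prompt to the local Ollama server and return the parsed JSON reply."""
    data = json.dumps(build_payload(model, prompt, num_ctx)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as reply:
        return json.load(reply)
```

Calling `tokens_per_second(run_prompt("gpt-oss:20b", "Tell me a short story", 16384))` would then give a comparable tokens-per-second figure for that context size.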

- Deepseek-r1 14b (9GB): With a context window of up to 16k, GPU usage is 100% at around 70 tokens per second. At 32k, there is a split of 21% CPU to 79% GPU, with performance dropping to 19.2 tokens per second.

- gpt-oss 20b (13GB): With a context window of up to 8k, GPU usage is 100% at around 128 tokens per second. At 16k, there is a split of 7% CPU to 93% GPU, with performance dropping to 50.5 tokens per second.

- Gemma 3 12b (8.1GB): With a context window of up to 32k, GPU usage is 100% at around 71 tokens per second. At 32k, there is a split of 16% CPU to 84% GPU, with performance dropping to 39 tokens per second.

- Llama 3.2 Vision (7.8GB): With a context window of up to 16k, GPU usage is 100% at around 120 tokens per second. At 32k, there is a split of 29% CPU to 71% GPU, with performance dropping to 68 tokens per second.
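To put those drops in perspective, here is a quick calculation of how much throughput each model loses once the CPU gets involved. The figures are taken straight from the list above, and the function name is just for illustration.

```python
# (tokens per second fully on GPU, tokens per second once split with the CPU)
RESULTS = {
    "Deepseek-r1 14b": (70.0, 19.2),
    "gpt-oss 20b": (128.0, 50.5),
    "Gemma 3 12b": (71.0, 39.0),
    "Llama 3.2 Vision": (120.0, 68.0),
}


def percent_drop(full_gpu_tps: float, split_tps: float) -> float:
    """Percentage of throughput lost compared with running fully on the GPU."""
    return (full_gpu_tps - split_tps) / full_gpu_tps * 100


for name, (full_gpu_tps, split_tps) in RESULTS.items():
    print(f"{name}: {percent_drop(full_gpu_tps, split_tps):.0f}% fewer tokens per second")
```

That works out to losses of roughly 73%, 61%, 45% and 43% respectively, even though in every case most of the model is still on the GPU.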

I’ll be the first to say this is not the most in-depth scientific testing of these models. It’s merely to illustrate a point. When your GPU cannot do all of the work itself and the rest of your PC comes into play, the performance of your LLMs will drop quite dramatically.

The takeaway is the importance of having enough available GPU memory to allow your LLMs to perform at their best. Wherever possible, you don’t want your CPU and RAM picking up the slack.

The performance here was still decent when that happened, at least. But that’s with some solid hardware to back up the GPU.

The quick recommendation would be to have at least 16GB of VRAM if you want to run models up to gpt-oss:20b. 24GB is better if you need space for more intensive workloads, which you could even get with a pair of well-priced 12GB RTX 3060s. You just need to work out what’s best for you.
